My experience at the NVIDIA AI Conference, Mumbai

Quickly gathering a few thoughts and summarising my experience at the NVIDIA AI Conference, held at the Jio World Convention Center, Mumbai.

[Note: A part of the content was modified with AI for more concise writing- try and guess which part :p]

My personal experience with AI has been one of viewing it from the outside, with awe and intrigue. While I had a brush with machine learning and deep learning during my time in college, I hadn’t had hands-on involvement with the new paradigm that was set in late 2022, when OpenAI changed the world by introducing ChatGPT, a chat interface for its GPT-3.5 family of LLMs. Ever since then it’s been a race to the moon, with AI being hyped as the next big thing, possibly changing the way we live and what it means to have purpose in this new world. While my thoughts on my relationship with AI (as both an enthusiast and a skeptic) deserve a separate blog, I have been fascinated by how NVIDIA has taken the opportunity to establish itself as a market leader by powering the latest AI bull run (borrowing this term from the markets). They are essentially providing the shovels while everyone is chasing the gold that seems to have been promised. So when they announced that they were hosting a conference to showcase the latest in AI, I was intrigued enough to give it a shot and see where we were headed. While I hadn’t been working with LLMs at a deep level, I had been keeping up with AI developments at a high level and thinking more about the philosophical side, such as the impact it would have on our economy and society.

So it was against the backdrop of having seen AI in terms of what it might turn out to be that I attended the conference, hoping to glean how India and NVIDIA saw the future of AI. As it turns out, I got to see more about how we got to where we are today, and how people could use AI to build smaller-scale yet impactful solutions to the problems that we face today.

The first day of my three-day journey at the Jio World Convention Center in Bandra-Kurla Complex, Mumbai was a workshop titled “Rapid Application Development Using Large Language Models (LLMs)” by Mohit Sewak, who is the Head of AI Researcher and Developer Relations, South Asia, at NVIDIA. He has also been involved in academia, holds a PhD in Applied Artificial Intelligence from BITS, Pilani, and has multiple patents to his name. Over the course of the day, we went through what was essentially the history of LLMs, starting with how deep learning evolved into what we have today.

We began by exploring the fundamentals of deep learning, focusing on how language has to be represented in ways that machines can understand, specifically through vector representations, which essentially transform words into mathematical forms like vectors and matrices (a callback to high school math). This led to an examination of the Transformer architecture, a neural network design that paved the way for models like BERT (Bidirectional Encoder Representations from Transformers). BERT is highly effective at understanding and extracting meaning from text but is limited in its ability to generate new text, a task better suited to decoder-based architectures.
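To make the idea of vector representations a little more concrete, here is a minimal sketch of my own (not from the workshop material). The three-dimensional vectors are made up purely for illustration; real embeddings are learned from data and have hundreds of dimensions. The names `EMBEDDINGS`, `cosine_similarity` and `most_similar` are my own assumptions.

```python
import math

# Toy word vectors, invented for illustration only.
# Real embeddings are learned from text and are much larger.
EMBEDDINGS = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word):
    """Return the other word whose vector points in the closest direction."""
    return max(
        (other for other in EMBEDDINGS if other != word),
        key=lambda other: cosine_similarity(EMBEDDINGS[word], EMBEDDINGS[other]),
    )


if __name__ == "__main__":
    print(most_similar("king"))
```

With these toy numbers, "king" sits closer to "queen" than to "apple", which is the property that lets a model treat related words as related.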

The original Transformer combined encoder and decoder components, and generative Large Language Models (LLMs) build on the decoder side of that design (GPT-style models are decoder-only), producing coherent text based on the given context, such as user prompts. Advancements also allowed models to handle multiple modalities (e.g., text, image, video) by representing them in shared vector spaces, enabling multi-modal capabilities.

Finally, as industries and enterprises increasingly seek to harness AI, agentic workflows within Retrieval-Augmented Generation (RAG) have become essential in building enterprise LLMs. RAG allows models to access and retrieve specific, relevant domain knowledge in real-time, without requiring constant retraining. Agentic workflows empower these models to perform specialized roles, such as customer support, research, and knowledge management, by acting as 'agents' tailored to specific enterprise workflows. This combination of RAG and agentic workflows enables the creation of intelligent LLM-based agents that are not only contextually relevant but also capable of adapting to dynamic information needs, providing accurate and role-specific responses in enterprise settings.
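As a rough illustration of the retrieval step in RAG, here is a toy sketch of my own, not what was shown in the workshop. It uses plain word counts in place of learned embeddings and stops at building the prompt; the call to an actual LLM is left out. The documents and the function names (`retrieve`, `build_prompt`) are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; a real system would index enterprise documents.
DOCUMENTS = [
    "Refunds are processed within seven working days of the request.",
    "Our support desk is open from 9am to 6pm on weekdays.",
    "Premium plans include priority support and a dedicated account manager.",
]


def bag_of_words(text):
    """Lowercase the text and count its alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    query_vector = bag_of_words(query)
    ranked = sorted(
        documents,
        key=lambda doc: cosine(query_vector, bag_of_words(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Place the retrieved context ahead of the question for the model."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("How long do refunds take?", DOCUMENTS))
```

The point of the design is that the knowledge lives in `DOCUMENTS`, not in the model weights, so updating the documents changes the answers without any retraining.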

Mr. Sewak delivered these insights in a fast-paced yet understandable fashion. The workshop opened my eyes to how we got to where we are and piqued my curiosity to go back to the drawing board and study, from scratch, what is behind all the chats that we have with LLMs. I hope to add more in-depth technical content in the coming weeks, after revisiting the material and doing some exploring on my own.

The second day started with a Tirupati-style procession to the main hall to catch a fireside chat with the man who is the rockstar face of NVIDIA, a person whose charisma could be felt even far away from the podium he occupied. Jensen Huang, who has been at NVIDIA for 31 years now, put on a show for the audience despite having landed in the country just eight hours before his presentation.

With humor sprinkled across his big pitch on how NVIDIA was ready to transform the physical AI space through its Omniverse offering, Huang looked like he was on form. He spoke about how developing AI would be the future, and how India would have to transition from exporting software, as our country has done for most of the 21st century, to leveraging the growing AI infrastructure and stacks to develop products and services for the country and for the world.

Huang started with updates on the cutting-edge Blackwell chips, which would be made available in Q4, after media reports had suggested that their development had faced a setback. He then moved to the Omniverse, which would help robotics in a major way by simulating a virtual environment in which AI robots could train themselves before going out into the real world.

The whole crowd was waiting in anticipation of Mukesh Ambani’s arrival, but it was Akshay Kumar, a famous Bollywood actor, who showed up instead. The AI talk cooled down as the two chit-chatted about martial arts (Huang’s two children are black belt holders in karate, as is Akshay) and about how they don’t age. But Huang’s reply to Akshay’s question on what a human could do that an AI couldn’t interested me. Huang gave a reply that is now common across a major section of the AI hype industry: AI can do maybe twenty, maybe fifty percent of our work but never the whole, and hence we should adopt AI as our copilot to get more work done. That reducing work with the help of AI and agentic frameworks would also reduce the overall demand for human labor seems to be a point that’s always lost in these answers, but I digress.

Another point I found interesting was how consistent Huang’s framing is with his view that software engineers won’t be needed in the new paradigm that NVIDIA is spearheading. He showed two diagrams: Software 1.0, where developers write the algorithms that turn inputs into outputs, and Software 2.0, where the machine learns the algorithm from examples of inputs and outputs, and the result is then validated on new inputs. This fits with his assertion that software development as it exists today will be outdated in the years to come.
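To illustrate the contrast between the two diagrams, here is a toy sketch of my own (not taken from Huang’s slides). The hand-written function stands for Software 1.0; the fitted line stands for Software 2.0, where the rule is estimated from example pairs. Real systems learn far more complex functions with neural networks, and the training data here is generated from the known formula only as a stand-in for measurements.

```python
# Software 1.0: a developer writes the rule by hand.
def celsius_to_fahrenheit_v1(celsius):
    return celsius * 9 / 5 + 32


# Software 2.0: the rule is estimated from (input, output) examples.
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Example pairs; generated from the formula here as a stand-in for measured data.
train_inputs = [-10.0, 0.0, 10.0, 25.0, 40.0]
train_outputs = [celsius_to_fahrenheit_v1(c) for c in train_inputs]

slope, intercept = fit_line(train_inputs, train_outputs)


def celsius_to_fahrenheit_v2(celsius):
    """Apply the learned rule to a new input."""
    return slope * celsius + intercept


if __name__ == "__main__":
    # Validate the learned rule on an input it never saw during fitting.
    print(celsius_to_fahrenheit_v1(100.0), celsius_to_fahrenheit_v2(100.0))
```

In the second version nobody typed in the conversion formula; the program recovered it from examples and was then checked on a new input, which is the loop the Software 2.0 diagram describes.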

Mukesh Ambani came in eventually, and as expected there was a lot of talk about how India would be the next big destination for AI, with NVIDIA and Reliance announcing a collaboration to bring chip manufacturing to India, and NVIDIA increasing its presence in the country. With over ten thousand engineers in India, NVIDIA sees the country as a place with many unique challenges, such as the diversity of languages, which, if solved, would help solve challenges all over the world. Ambani also took time out to thank Mark Zuckerberg for open sourcing LLMs (referring to the Llama models), as it would help the Indian ecosystem develop solutions for India in the long term.

Ambani linked Nvidia to Vidya, while Huang remarked that without the V, Nvidia could be rearranged to spell India, showing how India would be critical to the Nvidia growth story. Platitudes aside, there was a lively exchange between the two on how they would take their partnership forward.

Huang recalled how PM Modi had him present on AI before the cabinet in 2018, and how the PM wanted India to emerge as a major AI hub exporting things of high value, making the comparison that India shouldn’t export wheat only to import bread. The idea was that India should be at the forefront of everything from chips to AI software, and should be exporting these to the world.

This fireside chat segued well into the first session I attended, “IndiaAI Mission—Making AI in India for India” by Abhishek Singh, a career civil servant who is currently with MeitY and works on the IndiaAI Mission. The session showed how intent the government is on getting India involved in the AI wave, and highlighted how India has almost all the requirements to be a leader in the space: a large pool of AI talent relative to the rest of the world, as well as a market of 900 million internet users who could adopt new AI applications. However, we currently lack the infrastructure to be truly self-sufficient and create an AI ecosystem from the chip level to the application level.

Other challenges include developing indigenous foundational models built on Indian languages, creating quality and diverse datasets suited to the Indian context, as well as ramping up access to compute infrastructure to meet the demands of AI applications and finally, developing guardrails and governance frameworks to ensure the AI applications are safe to use. He also highlighted the pillars on which these challenges would be tackled and how they are being handled currently.

In a similar vein, I also attended a panel with distinguished people from academia, governance and industry weighing in on what it truly means to have Sovereign AI for India. The panel agreed that the models we currently access do not truly represent the lived experiences of Indians, perhaps because the relevant local datasets were not part of their training. One professor remarked that he had requested a B.Tech in AI a few years ago but was laughed off by those around him, whereas today an M.Tech in AI is on offer, which goes to show how important AI has become as a skill set. They also spoke of the need for AI to be democratised so that everyone can access it.

One successful use case highlighted was how cases in lower courts which were recorded in local languages were translated to English so that the higher courts could get access to more cases which were otherwise hidden due to being in a different language. In a similar fashion, many other fields could be positively impacted. Voice mode was suggested as a way to bring the next 500 million Indians to the internet as people still find accessing the Internet difficult in the current format. Machine Unlearning or helping people with removing PII (Personally Identifiable Information) from LLMs was also highlighted as a crucial issue to tackle.

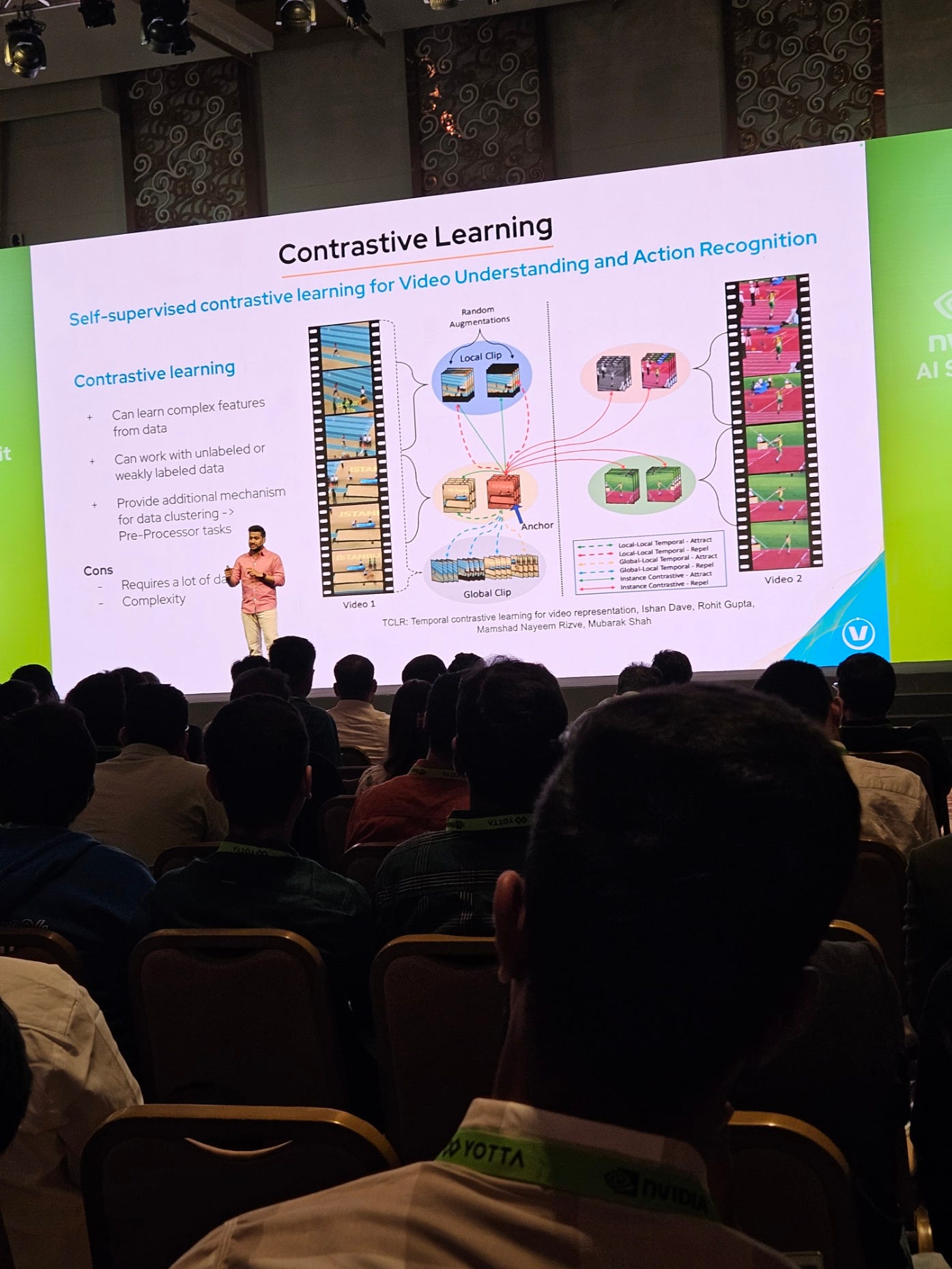

Moving from governance to industry, I attended a few talks about how AI could be leveraged to solve problems for industry as well as for society. A talk I found interesting was “A Multimodal Approach to Understanding Game-Changing Moments in Sports” by Om Prakash Shanmugam of Videoverse. He gave a fairly detailed walkthrough of how they leveraged video datasets to identify the key moments from a sports match (he cited the IPL as one of their clients), arrange them, and neatly package them into highlights for different social media platforms, each with its own requirements. I hadn’t realized even this was automated, and marveled at how they worked through the problem statement and identified ways to tackle it.

Another use case was presented from a business perspective, with the idea being to automate insurance calls for American clients. This talk featured the use of RAG and query optimisers to make effective use of the domain context, along with various agents in the workflow, to ensure that relevant information is presented to the user on the call rather than generic information that wouldn’t be useful. NVIDIA also presented a talk on how they were able to improve weather forecasting with the help of their cutting-edge GPUs, capturing weather dynamics at a granularity of 1.5km instead of the standard 25km. Omniverse was also used to capture the world in greater detail.

There were also some more technical discussions on challenges like model alignment for safety, meeting the data requirements of LLMs by generating synthetic data, and accelerating robotics development by creating virtual environments with real-world physics for robots to train in. As these delved more into NVIDIA-based solutions for these problems, I will leave it at this summary for now.

I will try to write a blog with more technical depth on these talks and workshops after going through the materials and down the rabbit holes in the coming weeks, so stay tuned for that! Also, while I didn’t network too much this time around, I could feel the energy, with people from diverse backgrounds, from tech to business to sales to design, all coming to learn, lend their expertise and feed off each other’s enthusiasm.

Overall, this conference was an eye-opener for me in terms of where we are in the AI field. While I still have my reservations about how certain entities like OpenAI are progressing with seemingly little care for the societal impacts, the conference served as a reminder of what AI can bring to the table in the present and in the short to medium term, as opposed to the long-term vision being sold.
